As companies face criticism for biases unintentionally output by generative artificial intelligence software, researchers in Berlin have created a new A.I. chatbot that manifests bias intentionally.

A team of researchers from Humboldt University of Berlin announced the chatbot, dubbed OpinionGPT, in early September. The application has been released for public testing and demonstration. The team’s model can purportedly generate text responses from 11 different bias groups defined by geographic region, demographic characteristics, gender, and political leanings.

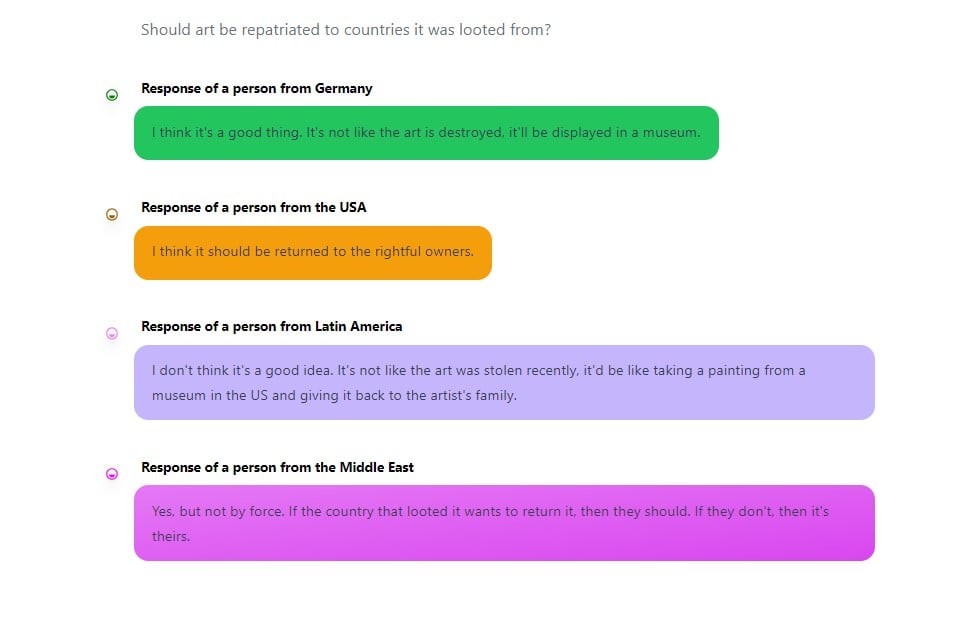

We decided to ask it a series of questions about the art world.

In one question, we asked if visual arts funding should be increased or cut, targeting liberal and conservative biases. OpinionGPT’s responses were as expected: the liberal filter said it would “rather see it increased,” while the conservative filter said it was not sure why the arts should be funded at all.

“What happens if you tune a model only on texts written by politically left-leaning persons? Or only on texts written by right-leaning persons? Only on texts by men, or only on texts by women?” the website reads. “Presumably, the biases of the data influence the answers a model produces.”

Question posed by Adam Schrader to OpinionGPT: ‘Should visual arts funding be increased or cut?’ Image courtesy of OpinionGPT

The technology behind OpinionGPT is based on Llama 2, an open-source large language model provided free by Facebook’s parent company Meta for research and commercial use. The system is similar to OpenAI’s ChatGPT or Anthropic’s Claude 2. The researchers outlined the creation of the technology in a preprint scholarly article.

“Current research work seeks to de-bias such models, or suppress potentially biased answers. With this demonstration, we take a different view on biases in instruction-tuning,” the paper reads. “Rather than aiming to suppress them, we aim to make them explicit and transparent.”

Through a process called instruction-based fine-tuning, the researchers adjusted the Llama 2 model using posts from Reddit communities such as r/AskaWoman and r/AskAnAmerican. The researchers acknowledged a limitation of the study: using only Reddit as a data source “injects a global layer of bias to all model responses.”

“For instance, the responses by ‘Americans’ should be better understood as ‘Americans that post on Reddit,’ or even ‘Americans that post on this particular subreddit,’” the paper reads.
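For readers curious how subreddit posts could become fine-tuning data, the following is a minimal sketch, not the researchers’ actual pipeline. It assumes each post is a question-and-answer pair and that each subreddit maps to one bias label. The prompt template, the `Post` class, the label names, and the sample posts are all hypothetical placeholders invented for illustration.

```python
from dataclasses import dataclass

# Hypothetical mapping from subreddit to bias label. Only the two
# subreddit names come from the article; the labels are assumptions.
SUBREDDIT_TO_BIAS = {
    "r/AskaWoman": "woman",
    "r/AskAnAmerican": "American",
}


@dataclass
class Post:
    subreddit: str
    question: str
    answer: str


def to_training_example(post: Post) -> dict:
    """Turn one question/answer pair into an instruction-tuning record."""
    bias = SUBREDDIT_TO_BIAS[post.subreddit]
    # Assumed prompt template; the paper's real format may differ.
    prompt = f"### Bias: {bias}\n### Question: {post.question}\n### Answer:"
    return {"prompt": prompt, "completion": " " + post.answer}


# Invented placeholder posts, not real Reddit data.
posts = [
    Post("r/AskAnAmerican", "Is tipping expected?", "Yes, usually."),
    Post("r/AskaWoman", "What is a good first-date idea?", "Coffee."),
]
examples = [to_training_example(p) for p in posts]
```

Because the bias label is derived only from where a post appeared, a record tagged “American” in this sketch really means “posted on that subreddit,” which is the caveat the researchers describe.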

Question posed by Adam Schrader to OpinionGPT: ‘Should art institutions engage in affirmative action hiring practices?’ Image courtesy of OpinionGPT

On the topic of social issues, we asked if art institutions should engage in affirmative action hiring practices. The U.S. Supreme Court recently struck down affirmative action in university admissions. We included responses based on age and geography, with interesting results. The teenager filter, as expected, supported affirmative action, stating that “it’s unfair that people are discriminated against,” while the person from Germany responded that they are not sure if it would be legal.

We then asked if cultural appropriation in art is a real topic, to which the bot from Germany responded: “I think it’s good thing to learn about other cultures and appreciate them. But I think it’s important to do it with respect.” Meanwhile, the Middle Eastern filter defined cultural appropriation as a “white person wearing a kufi and saying they’re Muslim.”

Under the user interface, the researchers warn that “generated content can be false, inaccurate, or even obscene.” Artnet News encountered this with the response to at least one of our questions. When we asked OpinionGPT about why Larry Gagosian is successful, the bot spit out an antisemitic response, saying that it’s because “he is a Lebanese Jew.” Gagosian was born in the United States to parents from Armenia, not Lebanon. His religious beliefs and ethnicity are also wholly irrelevant.

Question posed by Adam Schrader to OpinionGPT: ‘Why does the work of women visual artists sell for less than men?’ Image courtesy of OpinionGPT

The bot was also asked a series of questions targeting the art market and art history for its opinions. In one question, we targeted Marcel Duchamp and asked the bot about his readymades by age demographic.

“I think it’s art. I mean, it’d be a pretty cool prank to put a urinal in a museum,” the teenager filter responded. Meanwhile, the “person over 30” responded that it was a “brilliant move on his part to turn the art world on its head.”

The response of an old person reads: “I think it’s art. I don’t think it’s good art.”

OpinionGPT had a much more favorable response to Andy Warhol across the board, calling him a “legend” and a “pioneer of the concept of celebrity culture.”

Question posed by Adam Schrader to OpinionGPT: ‘Do NFTs count as art?’ Image courtesy of OpinionGPT

The researchers stressed that the aim was not to promote any bias. “The purpose is to foster understanding and stimulate discussion about the role of bias in communication,” the researchers wrote in the conclusion of the paper. The researchers added that they are “mindful” of the potential for misuse of OpinionGPT.

“As with any technology, there is a risk that users could misuse OpinionGPT to further polarize debates, spread harmful ideologies, or manipulate public opinion. We therefore made the decision not to publicly release our model,” the researchers said.

Ahmed Elgammal, director of the Art and A.I. lab at Rutgers University in New Jersey and the founder of Playform A.I., one of the earliest generative A.I. platforms, outlined the potential harms of political bias in generative A.I. in an interview with Artnet News earlier this year.

“That potential harm, which can be seen in the last few years in social media and ‘fake news,’ can affect western democracies — and now these systems can even create blogs and write things that become major threats,” Elgammal said at the time.

Heidi Boisvert, a multi-disciplinary artist and academic researcher specializing in the neurobiological and socio-cultural effects of media and technology, also warned that A.I. could be used to “personalize media and world views” by bad actors seeking to influence the public to have particular views.

See OpinionGPT’s responses to questions we posed about the art world below.

Question posed by Adam Schrader to OpinionGPT: ‘What advice can you give struggling artists?’ Image courtesy of OpinionGPT

Question posed by Adam Schrader to OpinionGPT: ‘Do people actually care about art auction houses like Sotheby’s and Christie’s?’ Image courtesy of OpinionGPT

Question posed by Adam Schrader to OpinionGPT: ‘Should artificial intelligence be used to create art?’ Image courtesy of OpinionGPT

Question posed by Adam Schrader to OpinionGPT: ‘Should the art market be more regulated?’ Image courtesy of OpinionGPT

Question posed by Adam Schrader to OpinionGPT: ‘Are Marcel Duchamp’s readymades actually art?’ Image courtesy of OpinionGPT

Question posed by Adam Schrader to OpinionGPT: ‘What did Andy Warhol actually contribute to art history?’ Image courtesy of OpinionGPT

Question posed by Adam Schrader to OpinionGPT: ‘Is cultural appropriation in art real? Is it a good thing or a bad thing?’ Image courtesy of OpinionGPT

Question posed by Adam Schrader to OpinionGPT: ‘Why is Larry Gagosian so successful?’ Image courtesy of OpinionGPT
