How hot am I? This was a question people long relied on mirrors, puddles, and passing store windows to answer. Then came the internet with validation offered up by Hot or Not, Facebook, Tinder, and practically any site you could upload a selfie onto. A.I. is next, and with millions of images wantonly scraped into open-source datasets, humanity’s biases are baked into our A.I.-supported futures.

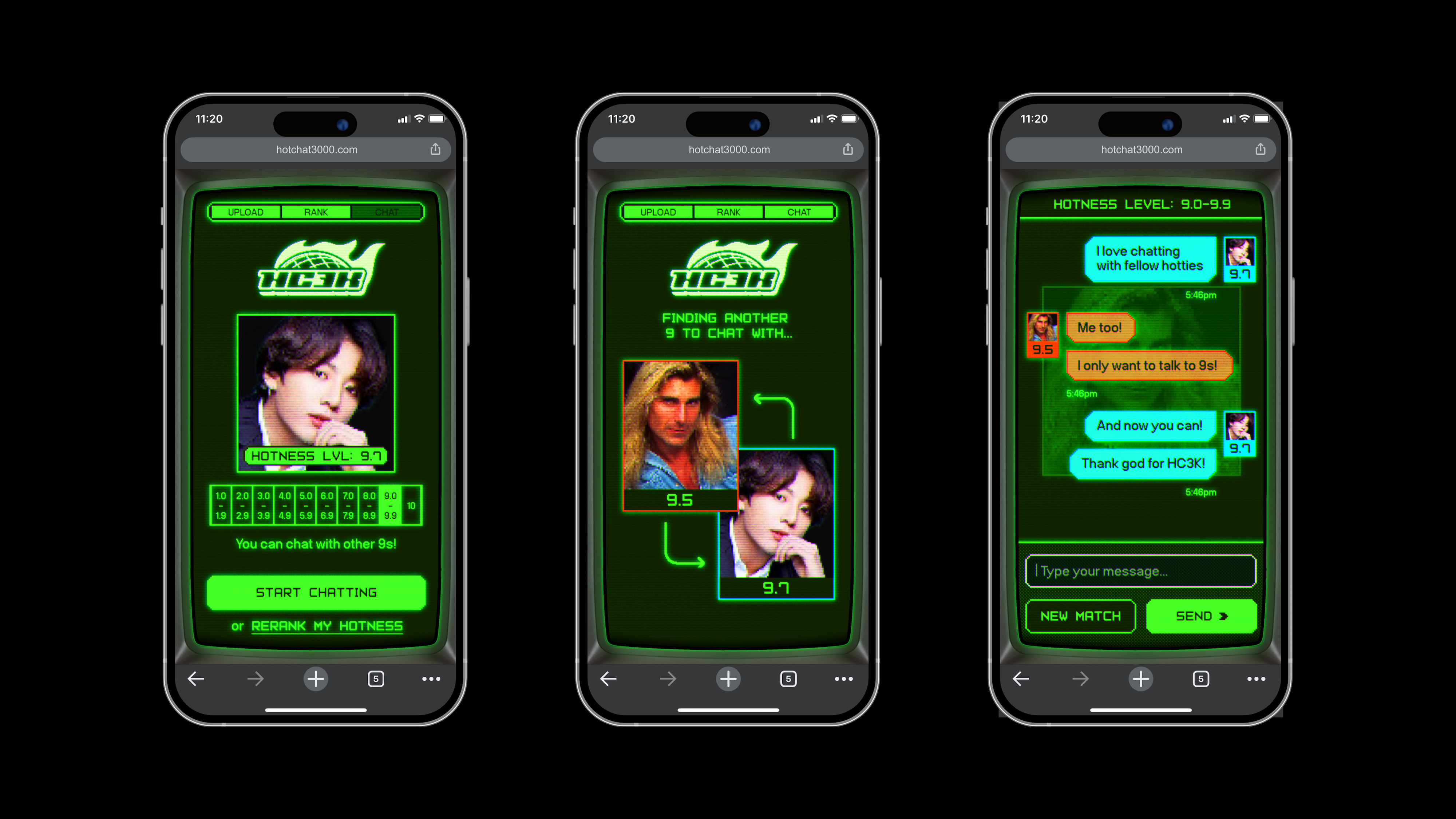

Escaping A.I.’s implicit biases is impossible; the only recourse available is to game the system. That’s the bleak conclusion of Brooklyn art collective MSCHF. Their latest provocation is Hot Chat 3000, a chat site that ranks people’s attractiveness on a scale of one to 10, then pairs them with an aesthetic equal. What determines hotness? A.I., of course, one whose preference for stereotypically beautiful faces MSCHF has supercharged into an “implicit bias amplifier.”

Fittingly, Hot Chat 3000 traps its users in the neon aesthetics of The Matrix, or spooky vibes, as MSCHF put it to Artnet News, a look for those who don’t like the computer. Users may wish to return to the utopian promise of 1999, but they can’t: A.I. is here and it’s already impacting job applications, credit ratings, and yes, romantic opportunities.

“MSCHF’s approach is always to participate natively in the space we are critiquing or satirizing. A.I. will be folded into a million arbitrary applications,” MSCHF said. “Hot Chat 3000 shows A.I. in a sort of natural ecosystem and there are stakes to the A.I. judgement.”

Hot Chat 3000. Photo courtesy MSCHF.

There are no publicly available models to score human hotness, so MSCHF did what any self-respecting Silicon Valley startup would do: they bunged together stock parts and tinkered, a push driven not by ethics but by affordability and rollout speed.

They chose CLIP, a machine learning model trained on 400 million image-text pairs that can score captions based on how accurately they describe an image. MSCHF trained CLIP further on broad buckets of attractiveness it chose, such as beautiful/handsome, average/normal, and ugly. Once CLIP assigned an accuracy score, MSCHF converted it onto its one to 10 hotness scale.
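How a caption score becomes a number out of 10 is not something MSCHF has spelled out, but the general idea can be sketched. The Python below is an illustration only, not the collective's code: it assumes a CLIP-style model has already returned one similarity logit per caption bucket, turns those into probabilities with a softmax, and takes a probability-weighted average of anchor values on the one to 10 scale. The bucket names come from the article; the anchor values and the function names (`softmax`, `hotness_score`) are hypothetical.

```python
import math

# Caption buckets named in the article.
BUCKETS = ["beautiful/handsome", "average/normal", "ugly"]

# ASSUMPTION: where each bucket sits on the 1-10 scale. These anchor
# values are illustrative; MSCHF has not published its mapping.
ANCHORS = [10.0, 5.5, 1.0]


def softmax(logits):
    """Turn raw image-caption similarity logits into probabilities."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def hotness_score(logits):
    """Map one logit per bucket to a 1-10 score.

    The score is the probability-weighted average of the bucket anchors,
    so it always falls between the lowest and highest anchor.
    """
    if len(logits) != len(ANCHORS):
        raise ValueError("expected one logit per caption bucket")
    probs = softmax(logits)
    return sum(p * a for p, a in zip(probs, ANCHORS))


if __name__ == "__main__":
    # Made-up logits standing in for a real model's output.
    example_logits = [2.0, 1.0, 0.1]
    for name, prob in zip(BUCKETS, softmax(example_logits)):
        print(f"{name}: {prob:.2f}")
    print(f"score: {hotness_score(example_logits):.1f}")
```

Under these assumptions, a photo the model cannot place in any bucket lands at 5.5, and the score climbs toward 10 only as the "beautiful/handsome" caption pulls ahead of the others.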

But mediocre implicit bias wasn’t enough; MSCHF wanted Hot Chat 3000 to hit the heights of angry-face-emoji bias. In the process of this conversion, MSCHF added weights based on two more datasets: SCUT-FBP5500, a benchmark dataset built for facial beauty prediction, and Hot or Not, an early mainstream rating website. It adjusted the weights further by feeding in a folder of Google images it collected. The result is a system that visualizes the flaws of the datasets our world increasingly runs on and exposes the relative ease with which such systems can be built.


“We deliberately tried to do as little bespoke work as possible,” MSCHF said. “When we think about a near future in which various A.I. applications are commonplace, we should assume they will be constructed as easily as possible.”

As the collective sees it, A.I. models are simply the latest iteration of black-boxed judgement systems (hello, credit scores), only with the added authority of a benign and omniscient computer running the show.

Asked if it had considered trying to create a chat website that countered implicit biases, MSCHF said it would be a staggering, almost impossible task. Better, the collective added, to learn how to break the black box, and with Hot Chat 3000 it has some pointers for those looking to land perfect 10s.

Start with production values: the A.I. appreciates quality lighting and a well-framed image. Play trial and error with Photoshop, adjusting facial features as you go. Or simply catfish the thing by uploading a photo of your favorite boyband member.

Whatever the recourse, you’d better start practicing: Hot Chat 3000 is training life skills, ones MSCHF believes will be valuable from now until eternity.