Ever since powerful AI image generators took the internet by storm last year, artists have been speaking out about the threat to their livelihoods, especially those whose work has been used to train the models without prior consent. Recently, their frustrations even escalated into a class-action lawsuit.

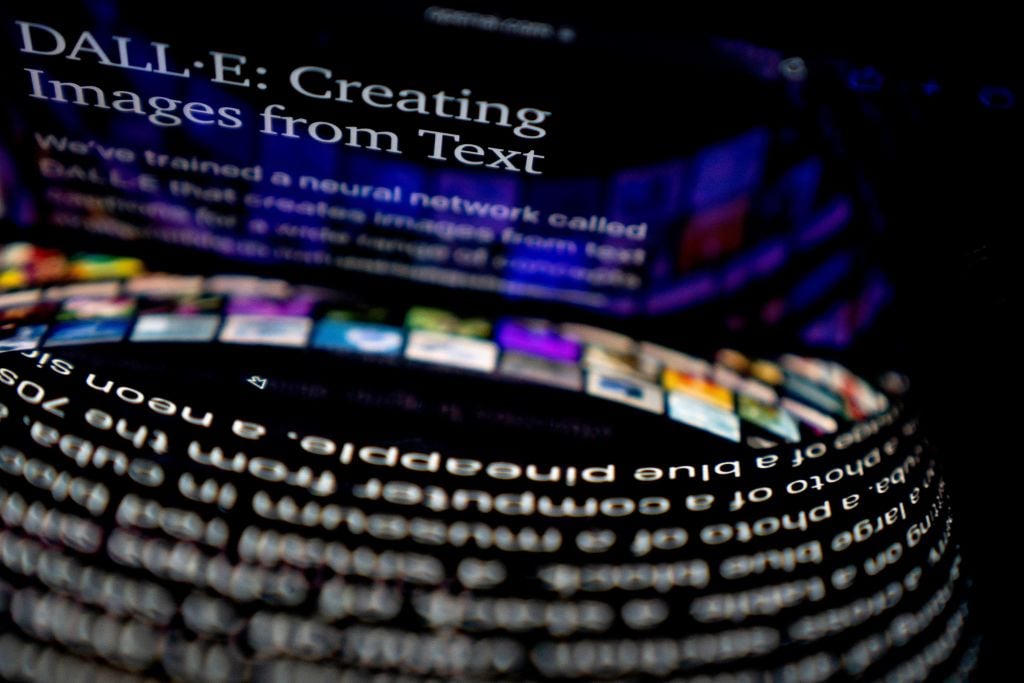

You only have to type an artist’s name into a prompt to see how easily big platforms like OpenAI’s DALL-E, Midjourney, and Stable Diffusion can ape an individual’s style, making it readily available for anyone to reuse and remix.

Now, researchers have developed a new tool intended to protect artists from this kind of plagiarism. Called “Glaze,” the technology works by “cloaking” images—introducing subtle changes to the defining characteristics of the style such as brushstroke, palette, texture or use of shadow. The differences, however, are minimal to the naked human eye.

It was developed by a team of computer scientists from SAND Lab, a research group at the University of Chicago. The idea was inspired by Fawkes, an algorithm SAND Lab built in 2020 that “cloaks” personal photographs so that they can’t be used as data for facial recognition models. This latest project was prompted by artists who reached out to the lab asking for help, after which the lab surveyed over 1,000 artists to assess the scale of the problem.

In order to adapt the Fawkes concept to artwork, “we had to devise a way where you basically separate out the stylistic features from the image from the object, and only try to disrupt the style feature,” explained Shawn Shan, a graduate student who co-authored the study.

In the end, the researchers realized they could pit a closely related form of AI known as “style transfer” against the AI image generators.

“Style transfer” models recreate an existing image in a different style without changing the content. Glaze borrows this technique to identify which features would need to change in order to alter the style of a work, and then “perturbs” just those features by the minimum amount necessary to confuse generative models.

“We’re using that information to come back to attack the model and mislead it into recognizing a different style from what the art actually uses,” explained Ben Zhao, one of the professors who led the research.
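For readers who want a concrete picture, the general idea of a bounded "cloak" can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Glaze's actual code: the function names (`features`, `cloak`), the matrix `W` standing in for a style feature extractor, the per-pixel `budget`, and the random "image" and "target" are all hypothetical. The target plays the role of the same artwork rendered in a different style, and the loop nudges the original toward it in feature space while capping how much any single pixel may change.

```python
import random

def features(W, x):
    """Toy linear stand-in for a style feature extractor: returns W @ x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]

def feature_distance(W, a, b):
    """Squared distance between the feature vectors of a and b."""
    return sum((p - q) ** 2 for p, q in zip(features(W, a), features(W, b)))

def cloak(W, image, target, budget=0.05, steps=200, lr=0.01):
    """Projected gradient descent (an assumed technique for this sketch):
    pull the image's features toward the target's features while keeping
    every pixel change within +/- budget."""
    delta = [0.0] * len(image)
    f_target = features(W, target)
    for _ in range(steps):
        cloaked = [p + d for p, d in zip(image, delta)]
        residual = [f - t for f, t in zip(features(W, cloaked), f_target)]
        # Gradient of ||W(image + delta) - W target||^2 with respect to delta
        # is 2 * W^T residual.
        grad = [2 * sum(W[i][j] * residual[i] for i in range(len(W)))
                for j in range(len(image))]
        # Take a step, then clip back into the allowed perturbation range.
        delta = [max(-budget, min(budget, d - lr * g))
                 for d, g in zip(delta, grad)]
    return [p + d for p, d in zip(image, delta)]

# Hypothetical data: an 8-"pixel" image, a target in another style,
# and a 4x8 feature extractor.
rng = random.Random(0)
W = [[rng.uniform(-1, 1) for _ in range(8)] for _ in range(4)]
image = [rng.random() for _ in range(8)]
target = [rng.random() for _ in range(8)]

cloaked = cloak(W, image, target)
print("largest pixel change:", max(abs(c - p) for c, p in zip(cloaked, image)))
print("feature distance before:", feature_distance(W, image, target))
print("feature distance after: ", feature_distance(W, cloaked, target))
```

In this sketch the cloaked image stays within the pixel budget of the original, yet its features sit closer to the target style. A real system would replace the linear map with a learned feature extractor and would likely use a perceptual measure of visible change rather than a simple per-pixel cap.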

The efficacy of the approach was tested by training a generative model with the cloaked images and then asking it to output work in the same artist’s style. According to the researchers, the resulting images were much less successful forgeries than those produced when the input images were not cloaked. The results were also evaluated by a focus group of artists, with over 90 percent subsequently showing interest in using the “cloaking” software on their own work.

The model was, notably, equally confused when it was trained on a mix of cloaked and uncloaked images by the same artist, giving hope to artists whose uncloaked work has already been used to train existing generative models.

The research has been published in the paper “Protecting Artists from Style Mimicry by Text-to-Image Models.” SAND Lab is currently working on making the software available for download.