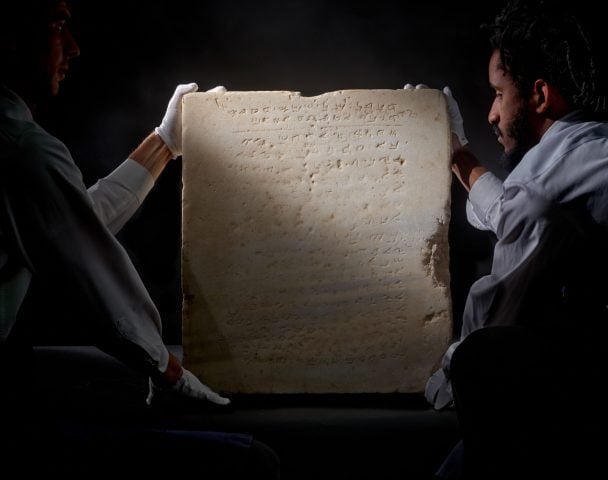

In recent months, strange collages have been popping up on Twitter feeds featuring improbable and occasionally gruesome images that each represent some kind of farcical prompt. Examples include “the demogorgon from Stranger Things holding a basketball,” “plague doctor onlyfans,” and “archaeologists discovering a plastic chair.”

Many will recognize these posts as the result of text-to-image generation, the latest craze in machine learning, which gained mainstream attention earlier this year with artificial intelligence company OpenAI’s announcement of an image generator called DALL-E 2. Some of the pictures it became known for include the avocado armchair and an astronaut riding a horse through space.

This year has also seen the arrivals of Midjourney, from a lab of the same name, and Google’s version, Imagen. Some possible practical uses include producing specific images to illustrate news items or creating cheap prototypes during design research and development.

It’s plain to see that the latest influx of viral images on Twitter are not nearly as impressively rendered as those that accompanied the announcement of these official releases, some of which are photorealistic. These much scrappier images with barely discernible faces have been produced by the replica Craiyon, known until recently as DALL-E Mini.

A horde of these open source unofficial text-to-image algorithms have surfaced online—including the Russian-language ruDALLE, Disco Diffusion, and Majesty Diffusion—produced by programmers in their spare time as an impatient response to the reluctance of tech giants to make their algorithms public.

OpenAI has explained that before it does so, it plans to study DALL-E 2’s limitations and the safety mitigations that would be necessary to make sure it’s deployed responsibly.

Beyond the obvious meme-making potential of these open source replicas, they have also proved a huge hit with a growing community of self-identifying, largely self-taught A.I. artists.

Michael Carychao, whose A.I. generations have won him 26,800 followers on TikTok, had previously worked in games, VFX, V.R., and animation when he came across an open source algorithm trained by the programmer and AI artist Katherine Crowson. “Instantly I dropped everything and have been making A.I. art every day since,” he said.

Much of the appeal seems to be the unprecedented quantity of images produced at high speed that generative A.I. can offer an artist looking to experiment with any idea that pops into their head.

“The most surprising things can be conjured up in seconds,” says Carychao, who also notes that since becoming a member of this community his social media feeds are filled with “quirky, beautiful, curated visions that blend old and new styles in provocative ways; it’s like going to a dozen galleries a day.”

OpenAI, Google, and Midjourney are justified in waiting to unleash the power of their creations, the full implications of which are far from apparent, but the decision has been controversial and their waitlists have created a huge gap in access.

The concept of making code open source for these artists is not only about barriers to entry but is a fundamental value of democratic digital production. Members of the community dedicate a huge amount of their time to making improvements to the algorithms currently available via Google Colab, congregating on the relevant Discord server to crowdsource their expertise.

“Every week, new models and new techniques appear, and what is perceived as good quality is ever-changing,” said Apolinário Passos, who set up the platform multimodal.art as a comprehensive guide to the scene. He is working to make the tools accessible to non-coders with the newly developed MindsEye interface that allows users to pilot different available algorithms.

Artists hoping to make use of the current resources available for producing A.I. art are best off being able to make at least some sense of Python and having access to a decent GPU. Aside from these hurdles, however, the tools have opened up digital art production to many more aspiring creatives and proved to be a great leveler in terms of artistic skill.

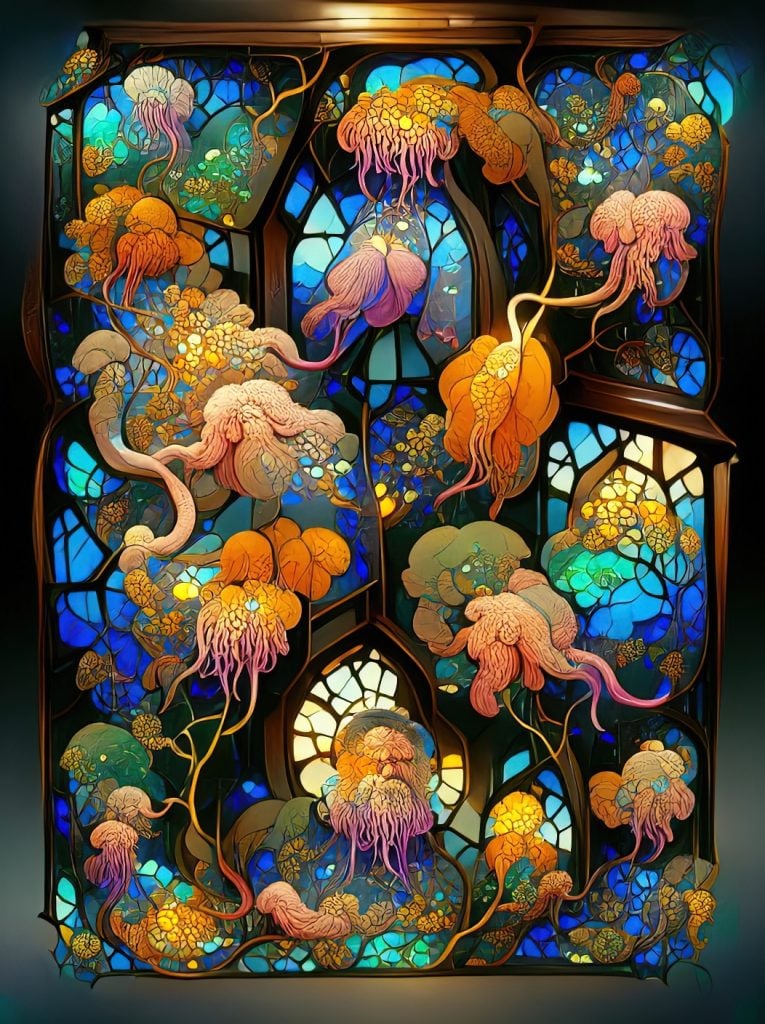

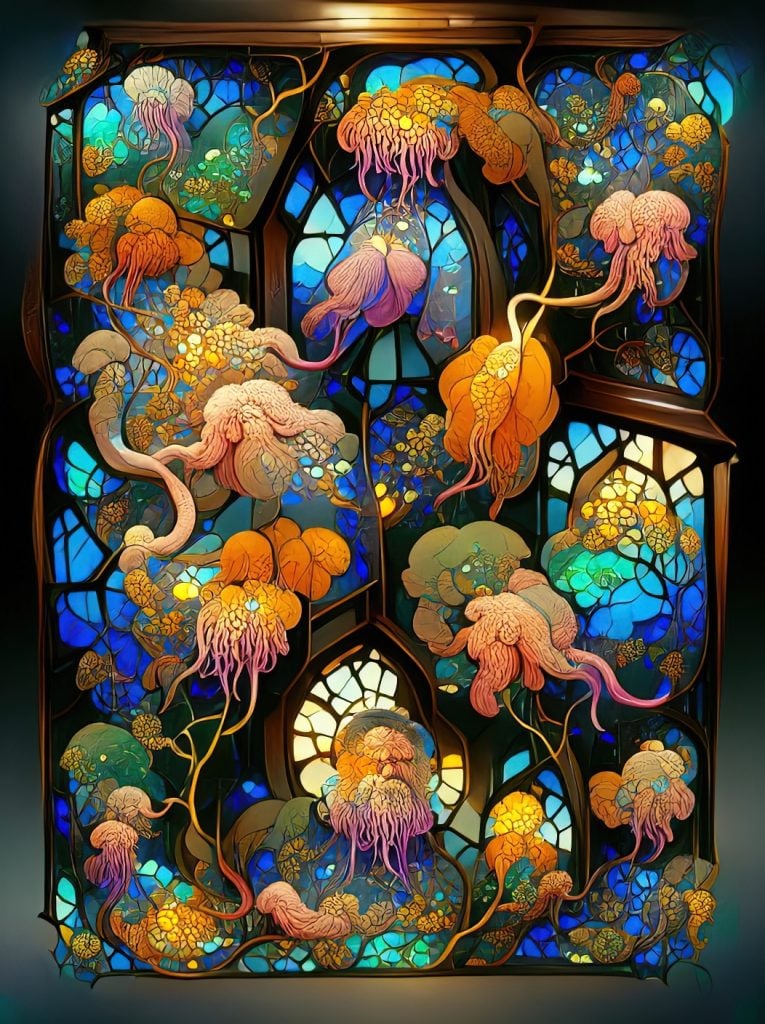

Unlimited Dream Co., Stained Glass (2022). Courtesy of Unlimited Dream Co.

According to London-based artist Unlimited Dream Co., who produces lyrical, semi-abstracted dreamlike compositions, the best comparison for text-to-image generation is the advent of photography in terms of its democratizing potential. It gives “anyone the ability to create perfect representations of anything they can imagine just by writing a sentence.”

Writing this sentence, technically known as “prompt engineering,” may be the key skill that replaces the artist’s hand. These carefully worded instructions can be seen as an interface between ideas floating in an artist’s head and the final images that show up on screen.

For artists, a crude command like “the demogorgon from Stranger Things holding a basketball” is unlikely to suffice. Instead, sophisticated prompt engineering requires adding in stylistic references to mediums, like Polaroid, stained glass or drawing, and to movements or makers.

The label of the popular 3D creation tool Unreal Engine, for example, cues the algorithm to output images that are more hyperrealistic.

The names of artists or designers will also produce the desired effects. The prompt “Barbara Hepworth” summons her trademark smoothly modeled, bulbous shapes with open cavities. This raises the question of what it means for a traditional artist to spend a lifetime cultivating a style that can be aped in just a few seconds.

Unlimited Dream Co, said his abstract compositions explore the contrasts this makes possible, for example, he might “mix Brutalist architecture with intricate Arts and Crafts patterns or organic flowing shapes made of hard plastic.”

“AI is being trained on the collective works of humanity and in my opinion that makes it our collective inheritance,” according to Carychao. “We can co-create with the greats of the past, we can riff with our ancestors, and future creators will be able to riff with the bodies of work we leave behind.”

This mode of creating—dubbed the “Recombinant Art” movement by creative technologist and writer Bakz T. Future—reflects our digital age, in which vast troves of context-free content is endlessly reordered and reused. It makes sense that our mode of creation might start to mimic our mode of consumption.

“The conjuring of thoughts into visuals enables the exploration of creativity,” said Passos. “It can be a powerful tool for new artists to figure out that they are artists.”