
‘This Is the Project of a More Just World’: Trevor Paglen on Making Art That Shows Alternative Realities

The artist talks about his long-standing interests in AI, invisible networks, and government surveillance.

Brian Boucher

One of the art world’s more intrepid figures, Trevor Paglen has ventured near unmapped prisons where terrorism suspects are subjected to brutal interrogations. He’s also gone to sites like Area 51, a secretive military base in the Nevada desert widely thought to be a testing ground for experimental aircraft, to take photographs that explore the edge of what is visible.

Paglen’s preoccupations include the physical structure of the Internet, the extent to which it allows governments to spy on their citizens, and the ways we might wriggle free of that surveillance. One might think of many of his projects as “watching the watchers,” but Paglen himself has said that he prefers to think of his body of work as an investigation into what invisibility looks like.

Trevor Paglen’s The Salt Pit, Northeast of Kabul, Afghanistan (2006). Courtesy of the artist and Metro Pictures, New York.

Paglen’s work has variously involved a satellite orbiting the Earth that serves no military, surveillance, or communications purpose and was sent aloft only to reflect light back to Earth; a team of scuba divers who mapped the fiber-optic cables that carry data to and from computers and telephones on distant continents, information that’s swept up in government surveillance efforts; and a piece of computer circuitry standing inside a Plexiglas box in an art gallery, where it creates a wireless network for visitors whose traffic is shielded from surveillance by open-source anonymizing software.

Paglen’s art has earned him a MacArthur Foundation “genius” grant, awarded in October 2017, as well as numerous international museum exhibitions. His work is held in public collections ranging from New York’s Museum of Modern Art to the Victoria and Albert Museum in London and the National Gallery of Victoria in Melbourne. Paglen, a Maryland native, received an MFA from the School of the Art Institute of Chicago and a PhD from the University of California, Berkeley, in geography, a field he describes as a way to study the mapping of power.

Paglen spoke to artnet News about his eerie recent photographs of pictures drawn by computers, the ways he puts visibility and invisibility to different purposes in different images, and what he makes of Black Mirror.

Trevor Paglen’s Octopus (Corpus: From the Depths), “Adversarially Evolved Hallucination” (2017). Image courtesy of the artist and Metro Pictures, New York.

Let’s start off by talking about the work that you’re doing with machine learning and artificial intelligence. Your most recent show at Metro Pictures, “A Study of Invisible Images,” included a number of photographs created by asking artificial intelligence programs to create images of various subjects—comets, vampires, or rainbows, for example. Were those works at all intended to remove the artist’s hand from the production of the work?

Actually, when you look under the hood at how AI is done, it requires tremendous amounts of human labor. To create those images you need massive training sets. In my case, I need hundreds or even thousands of images to build the training set for one of these pieces. You assemble those images and then label and tag them in order to teach the AI what they are.

AI systems are portrayed in pop culture as objective and unbiased, but that couldn’t be further from the truth. All sorts of human biases are built into machine learning systems. They can come from the biases of the predominantly white, male developers in Silicon Valley, or from biases built into the training data you’re using. I’m embracing that subjectivity, building training sets based on things like literature and metaphor that are not useful for capitalism or policing, the normal kinds of things artificial intelligence is used for. Instead, I’m building training sets that are a meta-commentary on the idea of training sets themselves.

Also, we’re generating at least tens of thousands of images for each one that I select and develop into an artwork. One of the points of those artworks for me is to show the degree to which metaphors, subjectivities, and highly specific forms of common sense are built into these systems. So it’s not outsourcing the making of the art; you’re just using a very different set of tools to make it. It’s more like Sol LeWitt than you might imagine! You’re setting up elaborate sets of rules, and the images that come out are essentially the output of that system.

Trevor Paglen’s Rainbow (Corpus: Omens and Predators), “Adversarially Evolved Hallucination” (2017). Courtesy of the artist and Metro Pictures, New York.

Okay, so those are the rules that create the images. How did you arrive at the subjects of the images themselves, these rainbows and vampires?

A very typical application of machine learning is building imaging systems that recognize objects. In a very simple example, you could build a neural network that recognizes all of the objects in your kitchen. To build that, you’d make a list of everything in your kitchen, and then you’d need thousands of images of those items. You put this whole corpus, or training set, together, feed it to the neural network, and say, “Okay, AI, I’m giving you all of these images, and you’re going to figure out the difference between a banana, a knife, a fork, and a plate.” It learns the difference, which is really just statistics: which images are statistically more likely to be pictures of knives, or forks, or bananas. Then you set up a webcam, point it around your kitchen, and the AI will say, “There’s a banana, a knife, and a fork.”

That’s a very simple example. In real life you might build something like that for the back end of Facebook, so that when you upload a picture of yourself, Facebook will say, “There’s a picture of Brian drinking a Coke, wearing a Calvin Klein shirt,” and it can learn about you from the images you post. Another application would be a self-driving car that drives around and says, “I recognize a stop sign, and there’s a tree, a person,” and so on. What I do is build a taxonomy based not on objects in your kitchen, or things a car would see on the street, or what Facebook wants to know about Brian, but on something like literature or philosophy. I have one called The Interpretation of Dreams. It goes through Freud’s book and picks out all of the symbols that Freud considers important for interpreting dreams: injections, puffy faces, scabby throats, windows, ballrooms, and so on. When you point that camera around your kitchen, it no longer sees bananas and plates and spoons. It sees puffy faces, windows, and mothers. It sees the world through the eyes of psychoanalytic symbols.

Trevor Paglen’s Vampire (Corpus: Monsters of Capitalism), “Adversarially Evolved Hallucination” (2017). Courtesy of the artist and Metro Pictures, New York.

The image of the vampire is especially creepy. How did that come about?

That comes from a training set made of images of monsters that have historically been allegories for capitalism. Once I’ve trained it, instead of having it look around the room and identify a vampire, I’ll say, “Draw me a picture of a vampire.”

So you can see what I’m getting at there: What are the politics of recognition, the politics of building any kind of taxonomy? There are always value judgments. When you teach a machine to recognize things, you’re always also teaching it to not recognize things. So one of the bigger questions is, how does that play out in terms of society?

Vision is one of the ongoing themes in your work. I loved a moment in your interview with Lauren Cornell in the new Phaidon book on your work, where you talked about looking at the night sky. Your mind now sees the night sky differently because of all these metaphors of surveillance, satellite technology, and systems of control, as opposed to pure “meat vision,” the way the unaided eye might see it. Have all of your investigations changed the way you think about meat vision as well?

I’ve been thinking a lot about what distinguishes those two forms of perception. What’s different about human perception, ultimately, is that it is much more frangible. René Magritte has become one of the key figures of machine learning for me. I can look at an apple and say, “This is not an apple,” and you, as a viewer, are going to say, “That’s an apple, but he’s saying it’s not an apple!” So then you say, “Oh, it’s a painting of an apple!” You can question the relationship between a representation and a thing. Those relationships are always up for grabs in human perception.

If I said to you, “Brian, I’m an octopus now, and I want you to take that seriously,” you could theoretically do that. Those kinds of things are precisely what AI and machine vision cannot do. An AI system can never break the rules that it has been programmed to use, whereas we break the rules all the time.

And that’s not a trivial thing. It is directly related to things like social justice. Magritte saying “This is not an apple” reverberates in Civil Rights-era protesters saying “I am a man,” or women saying “We are equal and want equal pay,” or queer activists saying “I’m queer, deal with it,” or trans activists saying “I’m neither man nor woman, but I’m still a person.” Contesting meaning is a very human activity, and always a political one. When you look at these machine vision systems, the politics of meaning and taxonomy are hard-coded into them, and they’re very strict. That’s a big difference, and it has potentially enormous consequences for how the world works.

Aerial photograph of the National Security Agency by Trevor Paglen. Commissioned by Creative Time Reports, 2013.

Speaking of rules, let’s talk about some of the rules you set for yourself. Some of the works you became known for early on were photographs of hidden government and military sites. As far as I know, one of your rules in making these works was to stay on the right side of the “no trespassing” signs. Do you ever try to cross those lines, or are you devoted to staying on the legal side of them?

I’m very dedicated to staying on the legal side of those lines. But around these very sensitive spaces, what is strictly legal isn’t necessarily what governs them. Guantanamo Bay is the perfect example. It’s under military jurisdiction, but it is an extra-legal space. So, I would submit to you, is a lot of the criminal justice system, which sounds paradoxical, but I think it’s true.

A lot of what I do in terms of walking up to those lines but not crossing them is predicated on the fact that I’m a white guy who is relatively well educated and who has a robust support network if something were to happen to me. I would not do a lot of that kind of work if I didn’t have that kind of protection or privilege. That said, I do think that the right to make images is an important right, and an important right to insist upon. Every amateur photographer will tell you about encountering police while they’re photographing, say, the Brooklyn Bridge. So when you’re doing photography, you’re not only making images, you’re also insisting on your right to make those images. For me, that’s a part of the practice as well.

Some of those early photographs are intentionally blurry. In your photographs of Area 51, for example, you show heat waves rising so as not to give the viewer the clarity typically expected of surveillance photos or the photographs in an exposé. The machine vision images are inscrutable in a different way. At other times, you literally make things visible: you took an aerial photograph of the headquarters of the National Security Agency, which you were authorized to take and distribute, at a time when the facility had not been photographed in decades because of government restrictions. Can you talk about your uses of clarity and obscurity?

I think of clarity and blurriness almost as epistemological genres. They do different kinds of work. When you see something that’s really clear, you imagine that you can understand it. Seeing something very blurry forces you to confront the fact that you don’t really understand the thing. You have to imagine something into the image, because it doesn’t speak for itself. Probably the most photorealistic images I’ve made are images of beaches, places where the NSA is tapping transcontinental fiber-optic cables. Everything is clear, but the irony is that the thing I’m photographing is actually invisible.

On the other hand, I might take a picture of a secret military base, or of something going on inside a neural network, and the aesthetic is much more impressionistic, which makes viewers question what they think they know, or how to read the image. Those different strategies can do different kinds of work. I’m just promiscuously borrowing from different philosophies and different histories of art to try to speak as precisely as I can.

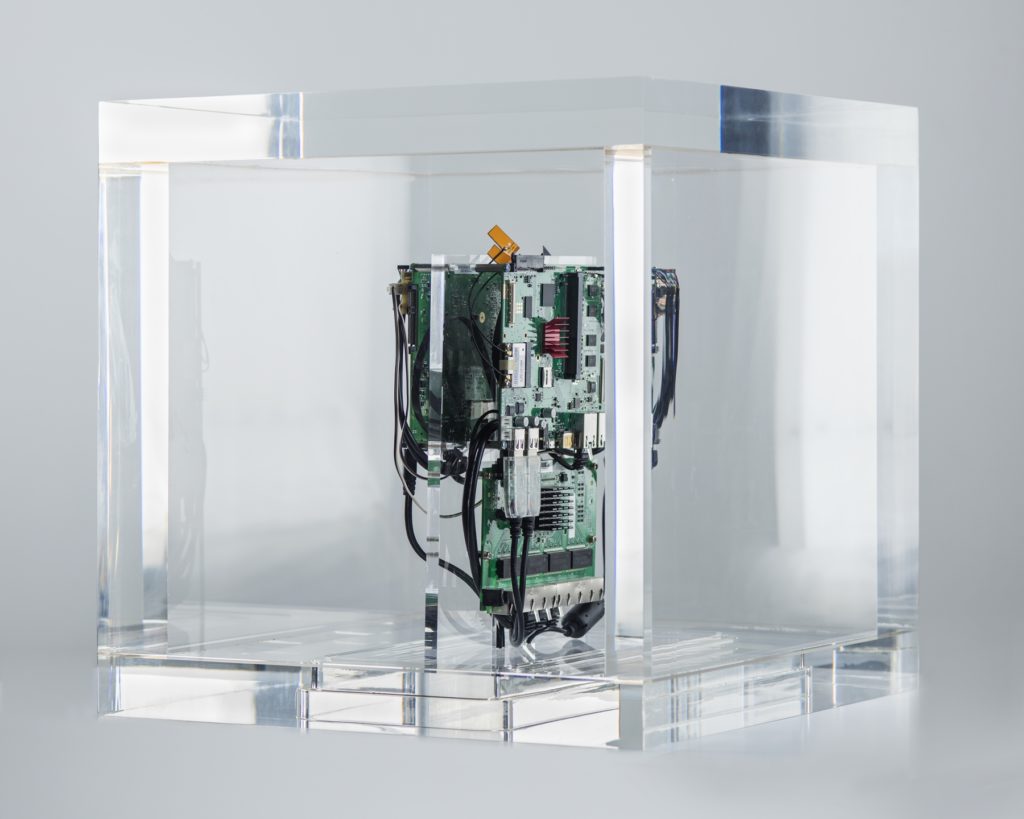

Trevor Paglen’s Autonomy Cube (2015). Courtesy of the artist and Metro Pictures, New York.

Let’s talk about your sculpture Autonomy Cube, which creates a Wi-Fi network whose traffic is shielded from surveillance. You’ve said that you hope to put the piece on view in museums, which, you point out, have become yet another place where the data traveling across institutional networks is collected and sold. That highlights how the gathering of our data has become such a given that not collecting it seems inconceivable, even when a museum’s motive is to serve visitors and optimize their experience. By encouraging institutions to install this network, you’re urging them to reject their place in these regimes, right?

One of the reasons I think museums are valuable institutions is the same reason libraries are valuable. A good museum should have a civic mission, not a corporate one. It should not be in the business of making money; it should be in the business of providing a public service. Most people who work in museums share that attitude, so the piece has actually been well received. I thought, “No one is ever going to show this!” But I’ve been met with the opposite reaction, and there’s a huge waiting list to show it. When you point out that you don’t actually have to collect all of the data all of the time, and that doing so might undermine their own mission, people in the arts are generally very receptive. But I think you’re right that we’ve been so conditioned by living in the world of Facebook and Google that we’ve to some extent lost the ability to imagine what alternatives would look like.

You’ve said that art can help us see the moment we’re in and imagine a different world. This made me think about science fiction, which shows us ways our world could be different. The television show Black Mirror imagines how technology might change our world in dark ways. Do you see any parallel between what you’re doing and science fiction?

That’s a different strategy. I do think things like Black Mirror are valuable, but I’m not into the aesthetics of shock. I think one can take a much more nuanced approach. Ultimately, I think art can do two things. It can show you some of the mechanics through which the world is constructed, and some of the underlying political, economic, social, and cultural relationships that bear down on our everyday lives and have structured society in particular ways, to the exclusion of others. It can also help denaturalize these things, help us realize that there is nothing natural about the way the world exists now, and if there’s nothing natural about the way it exists now, then we can imagine alternatives. That is something artists have always done, and it’s also something civil rights activists and feminists have always done. This is the project of a more just world: to try to understand the mechanics through which the world is made unjust and to point out that those mechanics could be different. The rules could be different.