Op-Ed

Text-to-Image Generators Have Altered the Digital Art Landscape—But Killed Creativity. Here’s Why an Era of A.I. Art Is Over

A.I. researcher and Playform founder Ahmed Elgammal on how A.I. art went from innovation to limitation.

Ahmed Elgammal

Everybody is now talking about generative A.I., and “A.I. Art,” about the dawn of a new era of creative A.I. that will take the jobs of artists. We see a huge backlash from artists and the art community. Yet the truth is quite the opposite: the era of “A.I. Art” may actually already be over.

What exactly happened? To start with, let me clarify what I mean by “A.I. Art.”

A.I. does not make art; it makes images. What makes these generated images art is the human artists behind A.I.—the artists who fed data to the machine, played with its knobs, and curated the output. So, I am using the term “A.I. Art” to talk about human art that uses A.I. as part of the creative process, with various degrees of autonomy. We are entering an era of massive use of such tools. However, the era when these tools struck a spark of artistic genius might be behind us.

What makes that spark in art? When Picasso painted Les Demoiselles d’Avignon in 1907, it was controversial, rejected by his close circle of friends. Even Georges Braque, Picasso’s colleague in Cubism, disliked it. Not until 1939, when the painting was shown at MoMA, did it gain public acceptance and recognition as a herald of Cubism. Jonathan Jones wrote in The Guardian on its centennial: “Works of art settle down eventually, become respectable. But, 100 years on, Picasso’s is still so new, so troubling, it would be an insult to call it a masterpiece.”

Picasso’s Les Demoiselles d’Avignon (1907) on display at MoMA in New York. Photo: Stan Honda/AFP via Getty Images.

The role of troubling challenge in the evolution of art can be explained well by a theory from the psychology of aesthetics pioneered by Colin Martindale in his 1990 book, The Clockwork Muse. He suggested that the main force behind the evolution of art is that artists innovate to pull against habituation. However, if artists innovate too much, their art will be too shocking and the audience will not like it. Good artists are the ones who find the sweet spot: innovative, but not too shocking. Great artists are the ones who push farther.

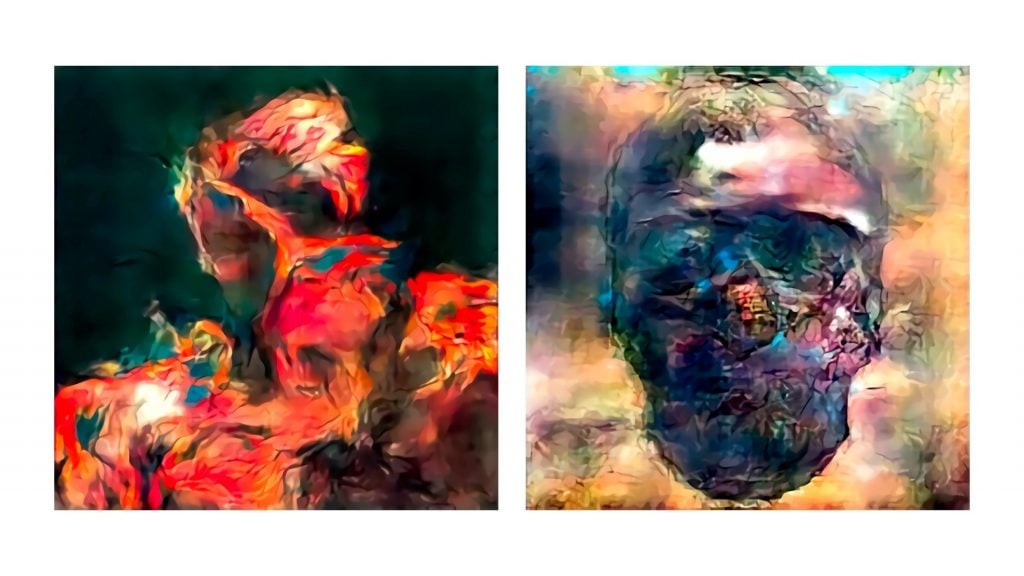

Can A.I. push beyond good to great? When Generative Adversarial Networks (GANs) came around, some artists took notice of this new A.I. technology. You can train these models on lots of images, and they can generate new images for you. In 2017, when we trained a GAN on classical portraits from Western art, it created some troubling, deformed portraits, which reminded me of Francis Bacon’s 1963 portraits of Henrietta Moraes. However, there is one fundamental difference: Bacon intended his portraits to be deformed, while the A.I. simply failed to make a portrait as instructed.

Francis Bacon, Three Studies for Portrait of Henrietta Moraes, (1963). Photo courtesy Sotheby’s.

With GANs, we entered the era of machine failure aesthetics. Some critics connected that to glitch art. Indeed, the surprise that came with GAN generations made them intriguing for artists. Many in the domain called it the “uncanny valley.”

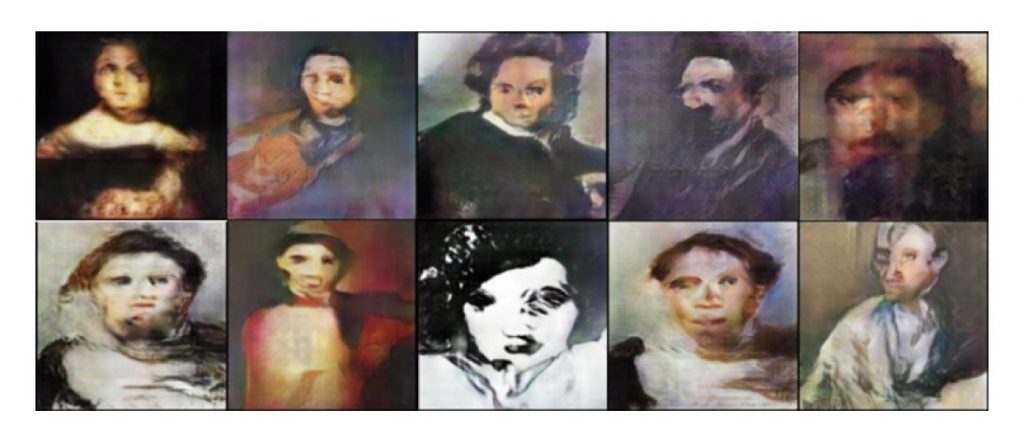

It was this uncanny valley and serendipity that made A.I. art interesting from 2017 to 2020. In 2019, I conducted a study with art historian Marian Mazzone in which we interviewed several artists who pioneered the use of A.I. in their process. We found that “artists understand A.I. as a major impetus to their own creative processes.” In particular, artists found A.I. useful in two ways: creative inspiration and creative volume. Creative inspiration meant that A.I. gave artists sparks of new ideas, new directions, and new ways to create their art.

A.I. portraits generated by a GAN trained on classical portraits, 2017. Photo: Ahmed Elgammal.

Unlike the current climate of backlash, A.I. Art was welcomed in the art world between 2017 and 2020.

In October 2018, Christie’s auctioned an A.I. portrait generated by a GAN, similar to the deformed portraits mentioned above. Sotheby’s auctioned a piece by artist Mario Klingemann in March 2019. Manhattan’s HG Contemporary had an exhibition showing my own work in February 2019. The Barbican Centre in London exhibited different A.I. artists in the summer of 2019. A.I. art was welcomed at Scope Miami in 2018 and Scope New York in 2019, among other art fairs. The National Museum of China in Beijing held a month-long A.I. art exhibition in November 2019, which attracted one million visitors.

During that time, the media covered A.I. art favorably. The art market welcomed A.I. artists. There were no calls to ban it. So what happened?

Mario Klingemann, Memories of Passersby I (2018). Photo courtesy Sotheby’s.

One fundamental difference between early A.I. models and today’s prompt-based models is that earlier models could be trained on smaller sets of images. This made it possible for artists to train their own A.I. models based on their own visual references. Today’s prompt-based models are pre-trained on billions of images taken from the internet without artist consent. This comes with loads of copyright issues. Such massive systems wipe out the artist’s identity. The difference between my work and your work depends only on which keywords we used in the prompt to steer the system. No wonder the copyright office refuses to copyright art generated by such systems. Capturing artist identity was the main reason photography could be copyrighted in courts in the late 19th century.

Over the last few years, A.I. has been getting better at generating high-quality, photorealistic images. It is also getting better at imitating the data that it is trained on. A new mode of interaction has been introduced: using text prompts to control the generation. Text prompting has now become the dominant way of generating images with A.I. These advances have made generative A.I. very good at following the instructions in a carefully crafted text prompt to generate whatever image we want, whether it is a photograph or an illustration, in any genre. The surprise is limited to what variations of our idea we might get. With many iterations, we can get the stunning high-fidelity, high-resolution image we want.

Text prompting helped A.I. get out of the uncanny valley. But it killed the surprise. This is because these models are trained on both text and images together, and learn to correlate visual concepts with language semantics. This makes the models better at creating figures and imitating styles that can be described in words.

Refik Anadol, Unsupervised (2022). Courtesy MoMA.

On the other hand, using language as part of training leaves the model very constrained in creating inspiring visual deformations. A.I. now creates its visual output confined by our language, losing its freedom to manipulate pixels without interference from human semantics.

In a sense, A.I. is becoming more like us—no longer able to see the world with an eye that complements or challenges us.

Naturally, A.I. still makes surprising failures in generation. We still get figures with four-fingered hands and three legs. However, these kinds of dumb failures are not necessarily interesting. They are not the kind of failure that caused the uncanny aesthetics of earlier generative A.I. Creative inspiration is not the only thing lost in this new generation of A.I. models relying on text prompts. The very idea of using text to generate images can limit artists. Artists are visual thinkers. Describing what they want using words adds an extra, unnatural layer of linguistic abstraction.

A.I. is becoming a tool for massive image generation, not the co-creative partner that excites artists with new ideas. A.I. is becoming very good at following the rules, but the artistic spark in it is gone. Artists will have to dig deeper, go beyond prompting, and use A.I. differently to find it.

Ahmed Elgammal is an A.I. researcher, professor, and founder and director of the Art and Artificial Intelligence Laboratory at Rutgers. He developed AICAN, an autonomous A.I. artist and the earliest A.I. art generator, and founded Playform, a platform that enables artists to integrate A.I. into their creative processes.