Art & Tech

A New Genre of Bad A.I. Art Takes the Stage: Nature Slop

Navigating the post-Shrimp Jesus world of content.

Earlier this year, web sleuths were puzzling over the flood of A.I.-generated images of Jesus Christ on Facebook. On its own, that’s not so strange. He’s a big, big star. But these particular Christs had their bodies formed of giant living shrimp, Arcimboldo-style, and there were endless variations on this theme. “Shrimp Jesus” became a symbol of so-called “slop,” the kind of A.I.-made content, generated en masse to create engagement, that has filled the web.

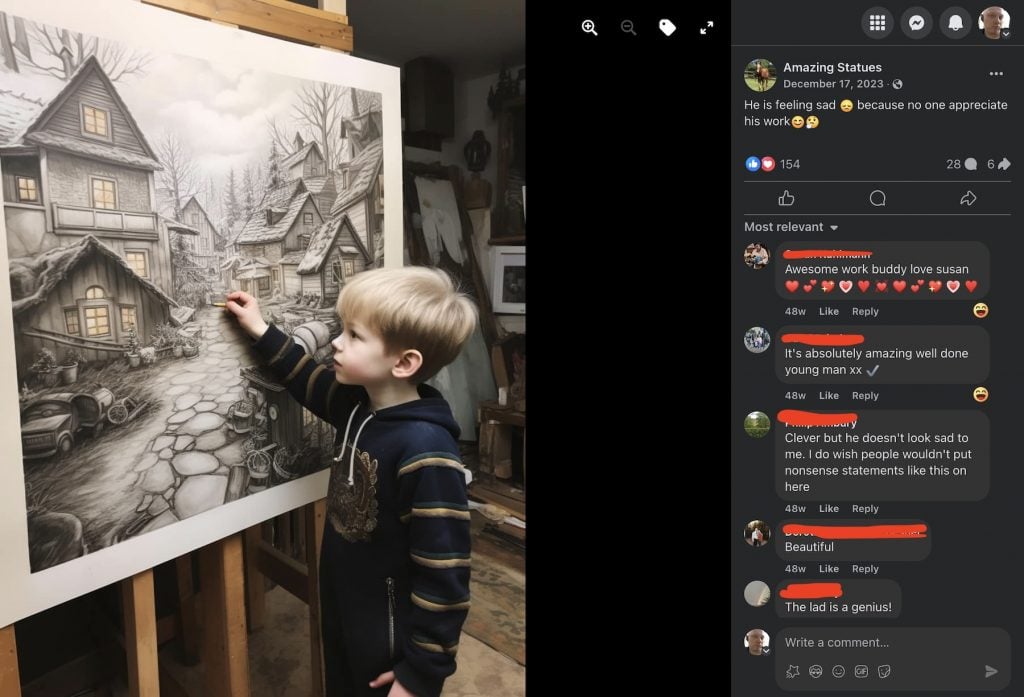

There were other, similarly surreal genres of slop that got attention at the time. My favorite was a trend depicting young children posing with artworks they had made, with a caption to the effect of “no one appreciates his/her art, please like.” The artworks being shown off were always surreally, comically complex.

Screenshot of an A.I.-generated image from the Facebook page “Amazing Statues.”

Journalist Max Read investigated for New York magazine and found that such images were made by a global cadre of content entrepreneurs in places like Kenya and Vietnam. They were relentlessly workshopping what would rise to the top of U.S. Facebook users’ algorithmically served feeds, making tiny amounts of money via Facebook’s “Performance Bonus” program whenever they hit on a trend that drew eyeballs.

By now, those particular genres seem to have died down (though the X account “Insane Facebook A.I. Slop” is still going strong). I guess there’s a finite audience for the shrimp-based Son of God or pint-sized Michelangelo.

Yet Jason Koebler of 404 Media reported recently that Mark Zuckerberg has been very clear that A.I. content, broadly defined, is part of the future he sees. “I think we’re going to add a whole new category of content, which is A.I.-generated or A.I.-summarized content or kind of existing content pulled together by A.I. in some way,” Zuckerberg has said. “And I think that that’s going to be just very exciting for the—for Facebook and Instagram and maybe Threads or other kind of Feed experiences over time.”

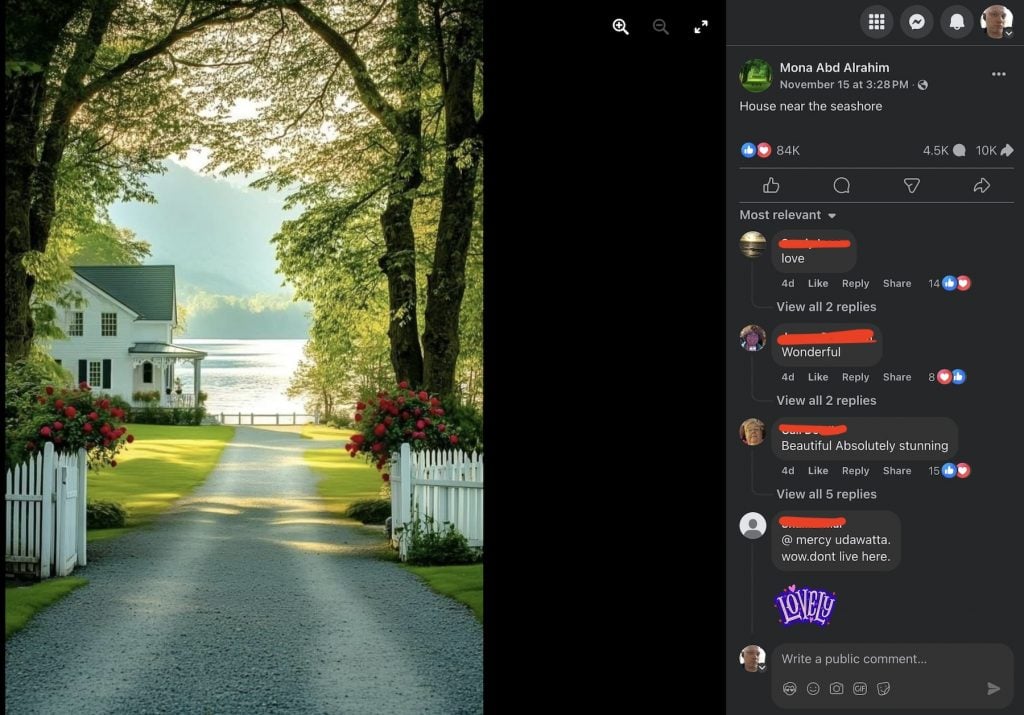

Screenshot of an image from the page “Mona Abd Alrahim.”

I’ve been fascinated enough by the phenomenon to click into many pages of A.I.-generated content. And the more you click, the more you get. As a consequence, I’m forming a picture of one direction this is heading. Let’s talk about “nature slop.”

What my feed now serves up, mercilessly, in lieu of updates from friends—or even the humorously weird Shrimp Jesus style of slop—is huge quantities of autumn foliage, natural wonders, or celestial events from pages with names like Luxury Destinations, Feel the Moment, and Traveling Is My Destiny. Some have the odd distortions of bad A.I., like this one.

Screenshot of an image of an A.I.-generated landscape on Facebook.

But most don’t. They may trigger your “A.I.-dar,” but mostly because they just feel subtly, indefinably too picturesque to be true. And they absolutely are A.I.

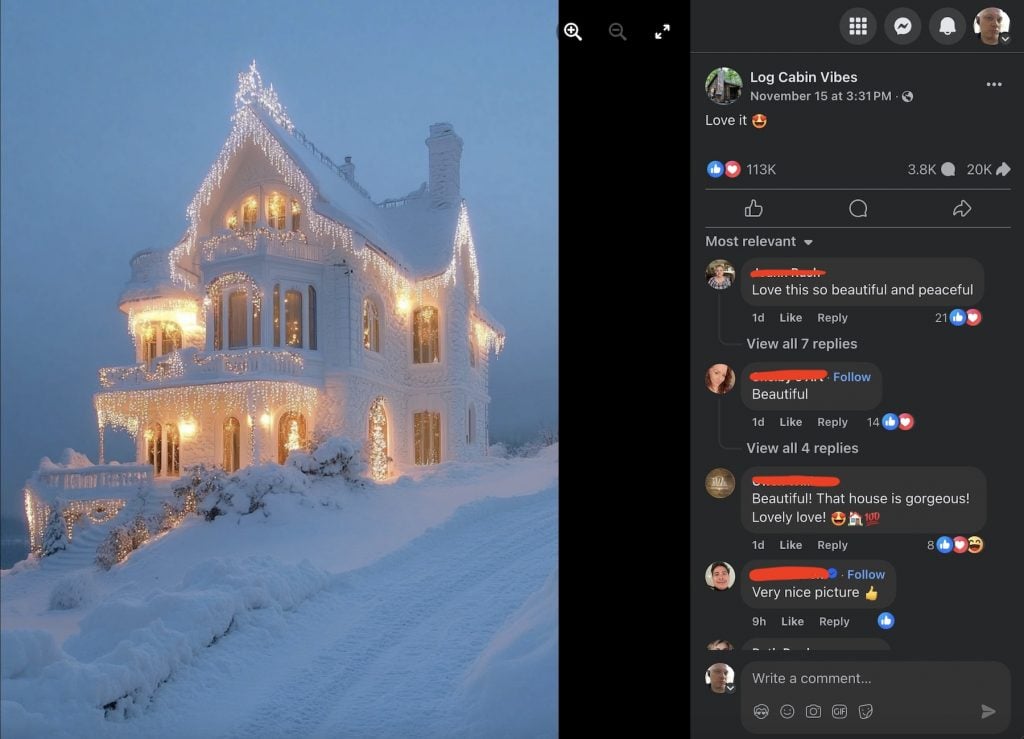

This simulation of beautiful nature is joined by another genre, which you might call “property slop”: cute snow-drenched cottages or cool mansions or aspirational interiors.

Screenshot of an image from the page “Log Cabin Vibes.”

Max Read wrote about how the “sloppers” he talked to sometimes made things deliberately weird or off-putting, because that caused people to click and to argue with each other about how it couldn’t be real, which in turn boosted the posts.

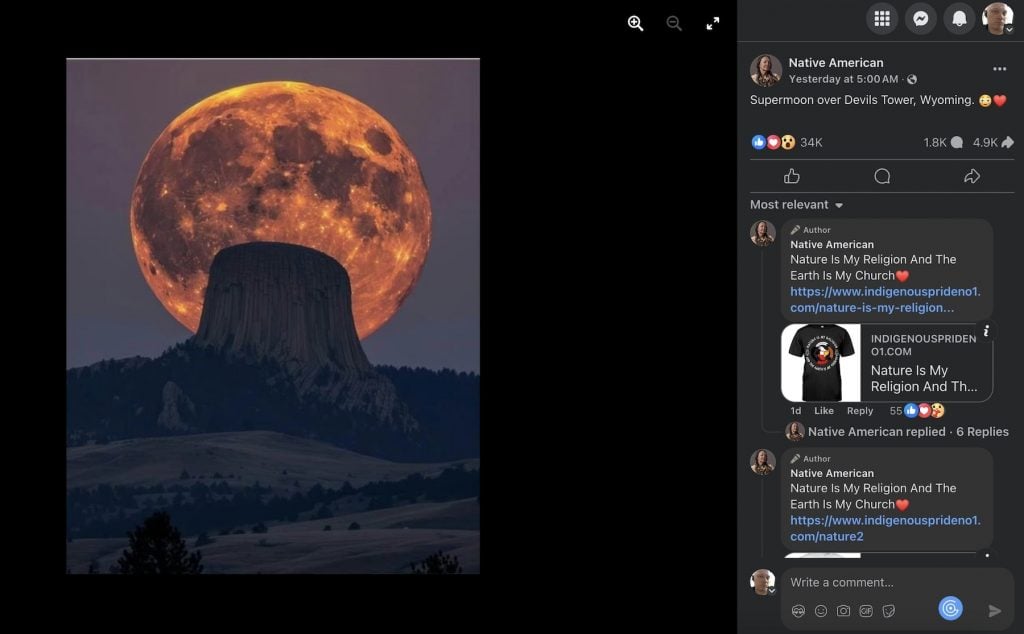

Certainly, some of this “nature slop” exaggerates certain “wondrous” qualities—the size of a moon as it rises, the colors of the leaves, the drama of a landscape.

Screenshot of an image from the page “Native American” (this one also seems to be selling T-shirts).

But, again, most “nature slop” could be real photos of striking places. Indeed, the appeal of the particular genre these images are simulating depends very specifically on your brain processing a photograph as being valuable for having some connection to a unique reality.

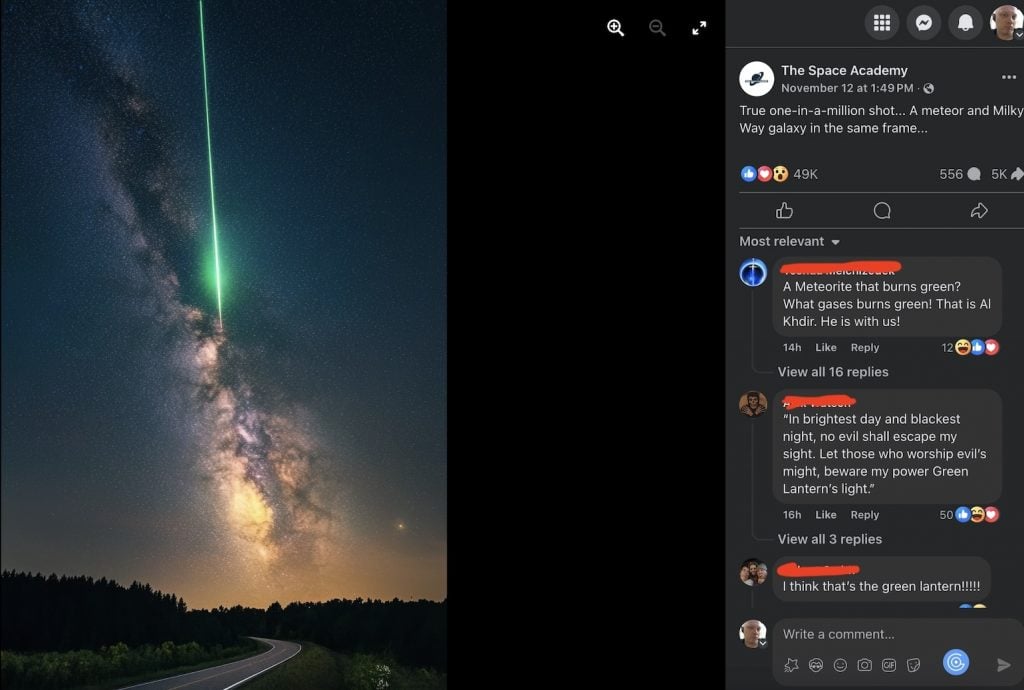

Thus, for instance, the caption on a picture on a page called The Space Academy boasts that it is a “true one-in-a-million shot,” even as its image of an electric green meteor plunging through the atmosphere is A.I.

Screenshot of an A.I.-generated image from the Facebook page “The Space Academy.”

How much of the interest in this content is real and how much of it is just more fodder for the “dead internet theory”? My guess is that there are thousands of A.I. accounts, commenting on one another to drum up attention. When the gambit works, it draws real fans of this kind of content. Who in turn are joined by another group clicking in to gawp at rubes interacting with fake stuff or to try to warn people it is A.I.

To be fair to the thousands of people earnestly commenting “amazing” or “I want to be there” next to this kind of content, I suspect that most just don’t think about it too much. They likely consume it in a distracted, “beyond-real-or-fake” way, just thinking “that’s nice.” The picture is mainly a warm, pleasant stimulus filling a space where actual communication with friends used to happen on now-depopulating social media.

Screenshot of an image on the page “Amazing US View.”

Don’t get me wrong: I definitely find something creepy about how this corner of the attention economy incentivizes the systematic blurring of the lines of what is real. But I worry just as much about the effects of this constant drip of nature slop on the skeptics who are being primed to view everything through the lens of cranky paranoia. That includes me.

I clicked on the “heart lightning bolt” pic that’s the lead image of this post, which is A.I.-generated, on a page called Astronomy Lovers. Afterwards, I found myself scrolling to another image, this one of a swirling alien planet.

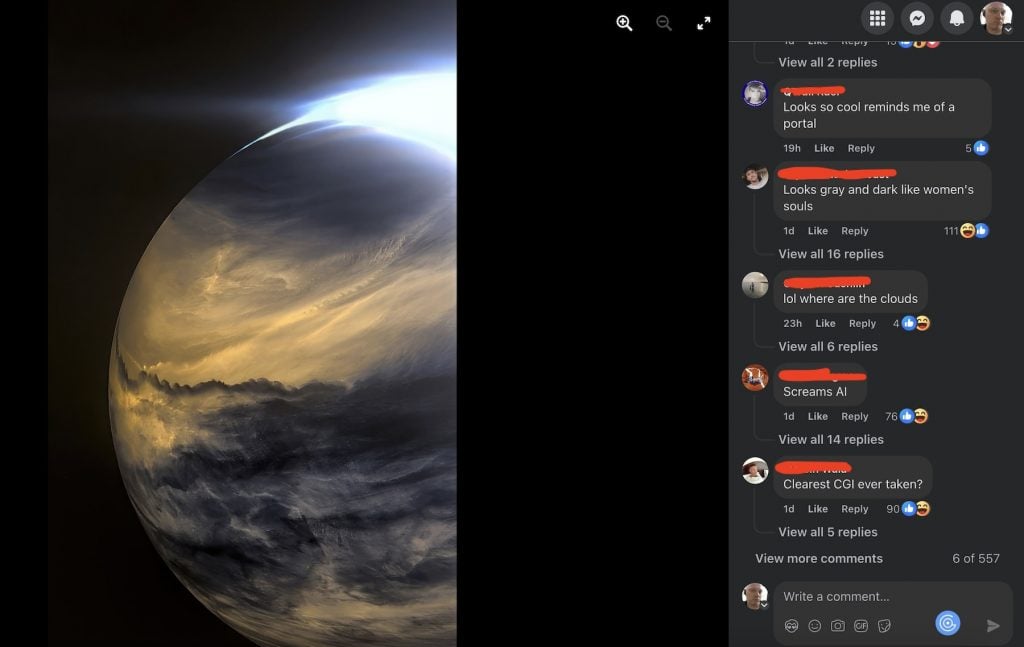

Screenshot of an image on the page “Astronomy Lovers.”

“Fake!” I thought.

“Screams A.I.,” said a top comment.

“Clearest CGI ever taken,” said another, riffing on the caption’s claim that it was the “clearest image of Venus ever taken.”

But the image came back as real when I ran it through my preferred A.I. image detector. And an image search revealed that the picture in question was indeed an infrared photo taken of Venus by Japan’s Akatsuki space probe.

All that ingenuity and expense to go to space and unlock the wonders of the cosmos, a true scientific and technical achievement, something that could inspire a new generation of scientists… and back on Earth we are innovating the technology to make sure we can’t be moved to care.