Demand for a New Tool That Poisons Generative A.I. Models Has Been ‘Off the Charts’

Nightshade was downloaded more than 250,000 times over five days.

A new, free tool designed by researchers at the University of Chicago to help artists “poison” artificial intelligence models trained on their images without their consent has proved immensely popular. Less than a week after it went live, the software was downloaded more than 250,000 times.

Released January 18, Nightshade is currently available for download for Windows and Apple computers from the University of Chicago website. Project leader Ben Zhao told VentureBeat that the download milestone was reached in just five days, an outcome that surprised the team even though it had expected high enthusiasm. The team did not geolocate downloads, but responses on social media indicate that the tool has been downloaded worldwide.

“I still underestimated it,” Zhao said. “The response is simply beyond anything we imagined.”

Artnet News has reached out to Zhao, a computer science professor at the university, for comment but did not hear back by press time.

“The demand for Nightshade has been off the charts,” the team said in a statement on social media. “Global requests have actually saturated our campus network link. No DOS attack, just legit downloads.”

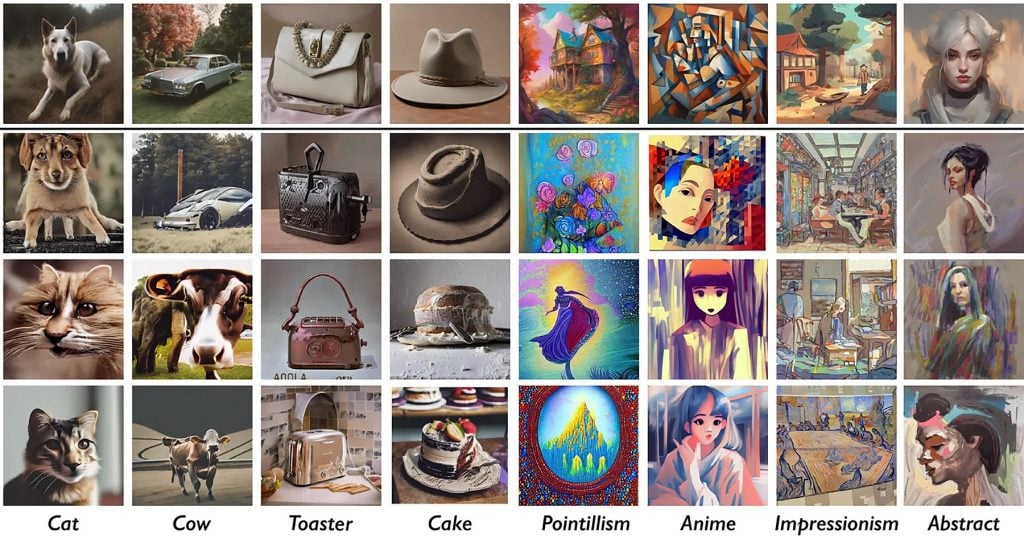

Nightshade works by “shading” images at the pixel level so that a machine learning algorithm views them as something entirely different, which causes any images generated by an A.I. model trained on them to be flawed. It is a sister product of Glaze, which seeks to cause A.I. models to misidentify an art style and replicate it incorrectly. The team is currently working on combining the tools.

“If you are an artist whose art style might be mimicked, we recommend that you do not post any images that are only shaded but not glazed,” the team wrote on its website. “We are still doing tests to understand how Glaze interacts with Nightshade on the same image.”

For now, artists are encouraged to use Nightshade on an image first and then use Glaze for protection, though this process might increase the level of visible artifacts in the work.

The team gave a couple of other words of warning on social media, including that it’s “probably not best idea to announce an image as poison, kinda defeats the purpose.” The program is also very memory intensive.

The program's user interface is not visually impressive, but it is intuitive. It guides an artist through selecting an image to shade and adjusting two mandatory settings and an optional third. The output is then rendered in 30 to 180 minutes, depending on the settings selected.

Some artists on social media have even suggested poisoning everything a person posts online, including “daily photos about your meal, family, puppies, kittens, random selfies and street shots.” Other platforms, such as the social and portfolio application Cara, which is currently in open beta, will soon integrate with Nightshade.