Analysis

How to Speak Robot: As the Art World Flirts With A.I., Here Is a Glossary of Terminology You Need to Know

Allow us to translate for you.


Tim Schneider

A version of this story first appeared in the spring 2020 Artnet Intelligence Report and is part of our cover package on Artificial Intelligence. For more, read our thorough breakdown of how A.I. could change the art business and our survey of the challenges of A.I. art authentication.

When the conversation turns to artificial intelligence, the jargon starts to fly fast and furious. Here are the terms you need to remember to keep up.

ALGORITHM – a command or sequence of commands detailing how to complete a specific task. Although the term is now most often applied to computing, the instruction manual for an IKEA table is technically just as much an algorithm as a line of code in a programming language.

NEURAL NETWORK – a configuration of algorithms, loosely modeled on the human brain, that automatically analyzes a data set to deliver an output. The algorithms consist of interconnected processing nodes (also called artificial neurons) usually organized into multiple successive layers (see “deep learning”).
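
For readers who want to see the idea in miniature, here is a rough sketch of data passing through two layers of nodes. The weights, biases, and the `forward` helper are hypothetical values chosen purely for illustration; a real network has far more nodes and learns its weights from data rather than having them typed in by hand.

```python
import math

def sigmoid(x):
    """Squash any number into the range between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))

def layer(inputs, weights, biases):
    """One layer: each node takes a weighted sum of every input, then squashes it."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]

def forward(inputs):
    # Hypothetical hand-picked weights; a trained network would learn these.
    hidden = layer(inputs, weights=[[0.5, -0.6], [0.3, 0.8]], biases=[0.0, -0.1])
    output = layer(hidden, weights=[[1.2, -0.7]], biases=[0.05])
    return output[0]

score = forward([1.0, 0.0])  # a single number between 0 and 1
```

The output of the first layer becomes the input of the second, which is all that "successive layers" means here.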

MACHINE LEARNING – a process in which algorithms, most prominently neural networks, search large amounts of data for patterns without explicit direction from humans on how to accomplish the task. The “learning” aspect refers to the fact that these systems continually refine their algorithms as they are exposed to more data.

TRAINING DATA – a set of pre-labeled examples (for instance, images defined as “dog”) repeatedly fed into a neural network to fine-tune its algorithms to detect particular patterns. In theory, the greater the quantity, quality, and diversity of the training data, and the more times that data set is processed by the neural network, the better the neural network will perform on unlabeled data after completing its training.
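
As a loose illustration of that feedback loop, the sketch below trains a single artificial neuron (a perceptron, which is far simpler than the networks used in practice) on four made-up labeled examples. The two numeric "features" per example, the labels, and the function names are all assumptions invented for this sketch.

```python
def predict(weights, bias, features):
    """Return 1 ("dog") if the weighted sum is positive, otherwise 0 ("not dog")."""
    total = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if total > 0 else 0

def train(examples, epochs=100, learning_rate=0.1):
    """Feed the labeled examples through repeatedly, nudging weights after each mistake."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(weights, bias, features)  # 0 when correct
            weights = [w + learning_rate * error * x for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias

# Hypothetical pre-labeled training data: (features, label), where 1 means "dog".
training_data = [
    ([0.9, 0.8], 1),
    ([0.8, 0.9], 1),
    ([0.1, 0.2], 0),
    ([0.2, 0.1], 0),
]

weights, bias = train(training_data)
guess = predict(weights, bias, [0.85, 0.85])  # an unlabeled example
```

After training, `predict` can be applied to examples the neuron has never seen, which is the step the definition describes as performing on unlabeled data.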

DEEP LEARNING – machine learning carried out through a so-called “deep” neural network boasting several interconnected layers of processing nodes. Each successive layer builds on the previous one to make finer and finer distinctions within the data—for instance, from recognizing the outlines of a furry object, to recognizing broadly doglike features, to recognizing specific features of specific dog breeds.

Trevor Paglen: From ‘Apple’ to ‘Anomaly’, installation view. The Curve, Barbican. © Tim P. Whitby / Getty Images.

COMPUTER VISION – also called “machine vision,” the process by which a computer uses machine learning to identify specific objects, items, or people in unlabeled images. Computer vision is the technology through which Facebook can automatically tag users and their friends in new photos uploaded to the platform.

ARTIFICIAL INTELLIGENCE (A.I.) – the discipline of data science in which deep neural networks independently generate new solutions to a given problem by extrapolating from machine learning. A.I. solutions often feel discomfiting to humans because algorithms may draw lessons from their (often biased or otherwise flawed) data that their developers did not anticipate, and so tend to accomplish tasks in ways humans would not. Most infamously, algorithms tasked with rating human beauty have in the past downgraded people of color precisely because their training data included a disproportionately high share of white folks.

GENERATIVE ADVERSARIAL NETWORK (G.A.N.) – an A.I. system in which two neural networks compete against each other to create artificial outputs that could pass for “real” ones. G.A.N.s consist of a generator algorithm, which produces new data, and a discriminator algorithm, which assesses whether or not incoming data is machine-made. As a whole, a G.A.N. succeeds when its generator component manages to fool its discriminator component.
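
The tug-of-war can be sketched with a toy example that works on single numbers instead of images. Everything here is an assumption made for illustration: the "real" data are numbers clustered around 4, the generator has one adjustable value (`theta`), and the discriminator is a one-node classifier. Image-making G.A.N.s use large networks on both sides, and this toy version is not guaranteed to settle on a good answer.

```python
import math
import random

def sigmoid(x):
    # Numerically stable squashing function (output between 0 and 1).
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def train_toy_gan(steps=2000, lr=0.05, seed=0):
    rng = random.Random(seed)
    theta = 0.0      # generator parameter: fake = noise + theta
    a, c = 0.0, 0.0  # discriminator parameters: D(x) = sigmoid(a * x + c)
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)          # hypothetical "real" sample
        fake = rng.gauss(0.0, 0.5) + theta  # generator's attempt

        # Discriminator step: score real samples higher and fakes lower.
        d_real = sigmoid(a * real + c)
        d_fake = sigmoid(a * fake + c)
        a -= lr * (-(1.0 - d_real) * real + d_fake * fake)
        c -= lr * (-(1.0 - d_real) + d_fake)

        # Generator step: shift theta so the discriminator rates fakes as more real.
        d_fake = sigmoid(a * fake + c)
        theta -= lr * (-(1.0 - d_fake) * a)
    return theta, a, c

theta, a, c = train_toy_gan()
```

Each pass through the loop gives both sides one small adjustment: the discriminator gets slightly better at telling the two kinds of number apart, and the generator responds by shifting its output toward whatever the discriminator currently scores as real.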

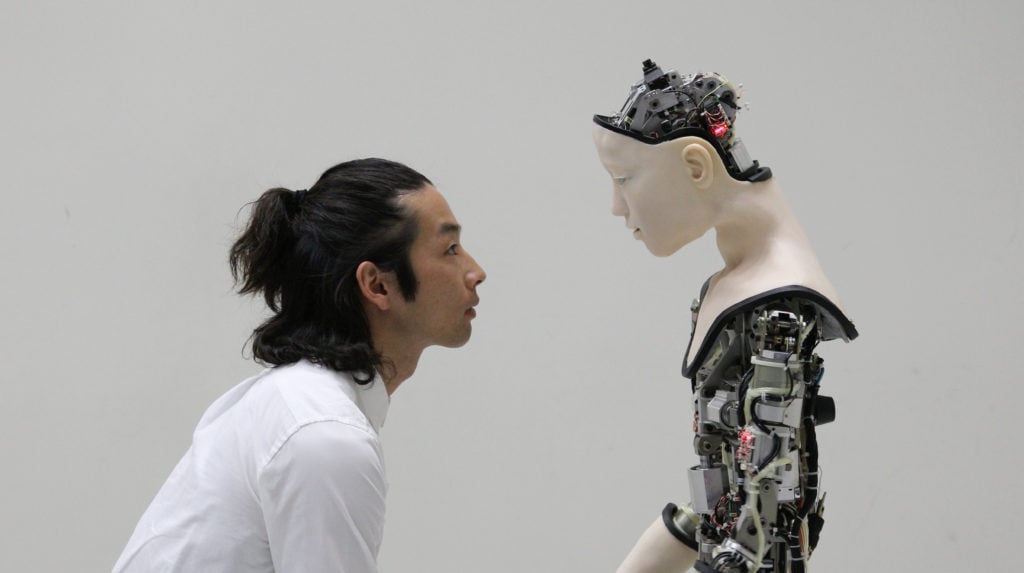

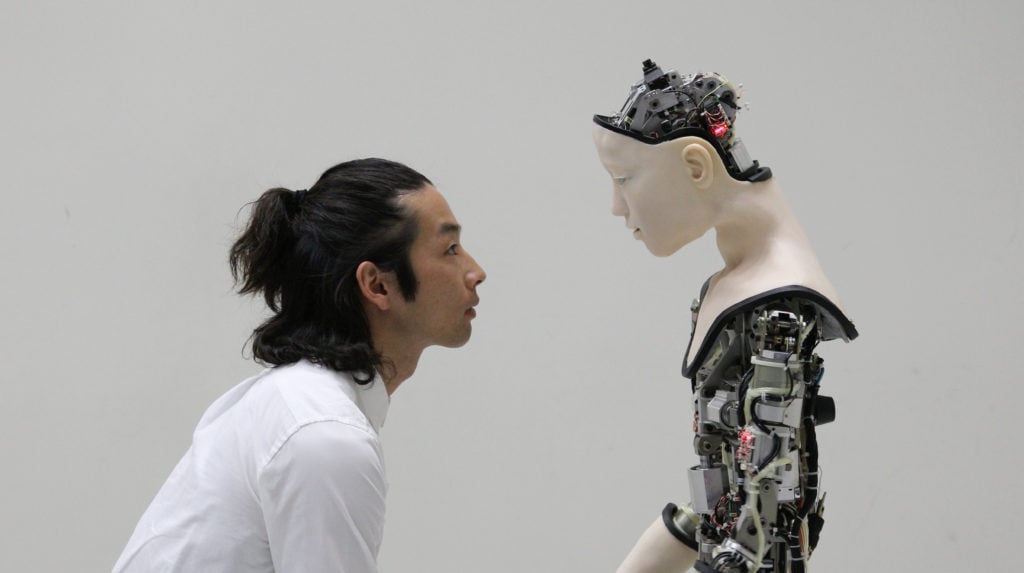

ARTIFICIAL GENERAL INTELLIGENCE (A.G.I.) – the evolutionary end point of A.I. popularized in science fiction, best described as a thinking (and perhaps even feeling) machine that can establish and pursue its own goals. A.G.I. is still only a figment of our collective imagination—and thankfully so, if you fear the sentient, murderous HAL 9000 in Stanley Kubrick’s 2001: A Space Odyssey—while machine-learning and task-directed A.I. are already reshaping more and more aspects of our lives every day.